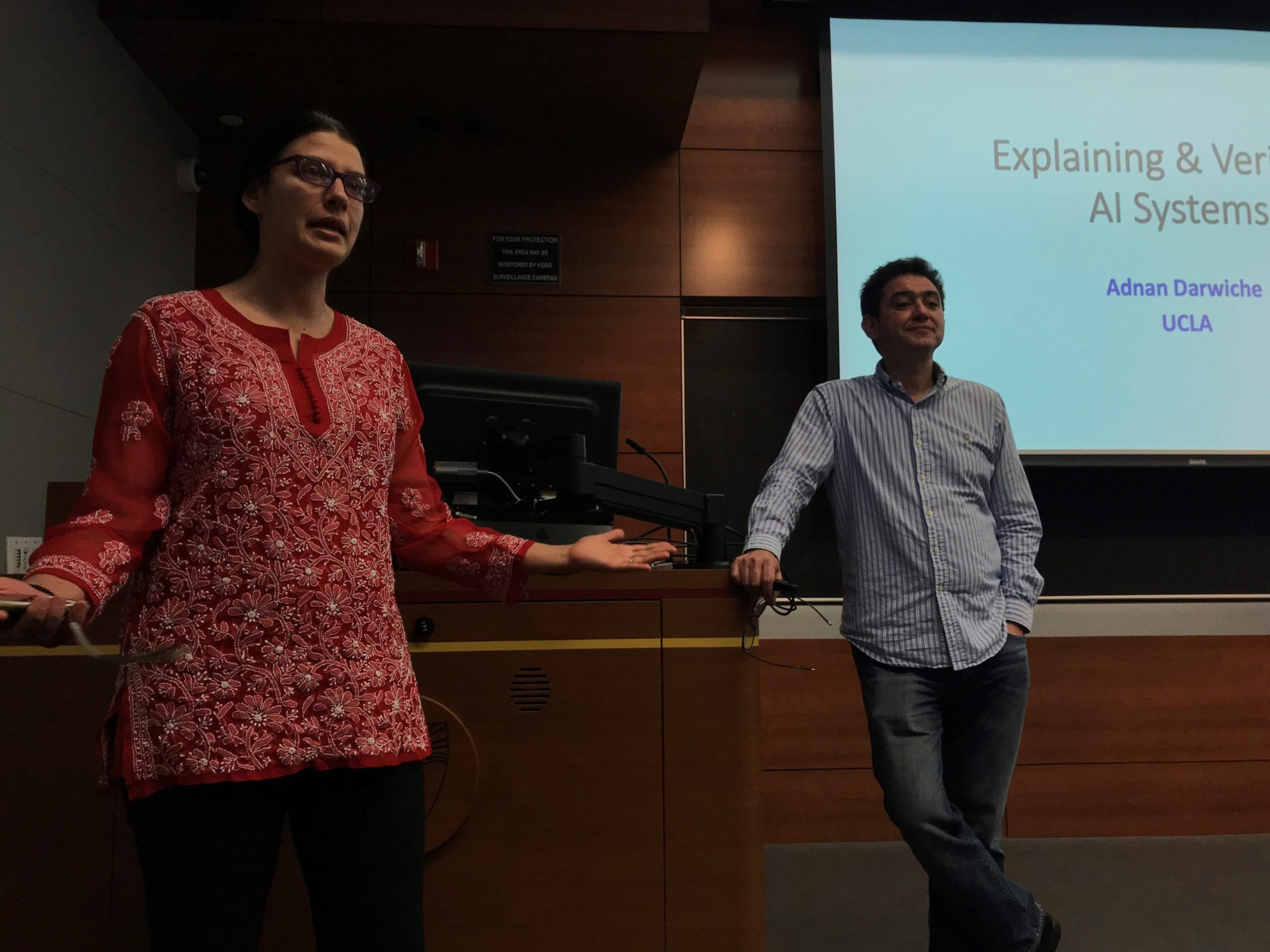

USC CAIS Associate Director Bistra Dilkina (left) introducing Dr. Adnan Darwiche.

As artificial intelligence becomes more established in critical areas, such as medicine and education, researchers are developing new techniques to validate and explain these models. Dr. Adnan Darwiche spoke of his work with Arthur Choi and Andy Shih on giving AI practitioners a set of fast tools to not only understand the models they develop, but also to automatically catch systematic modeling mistakes before they reach production.

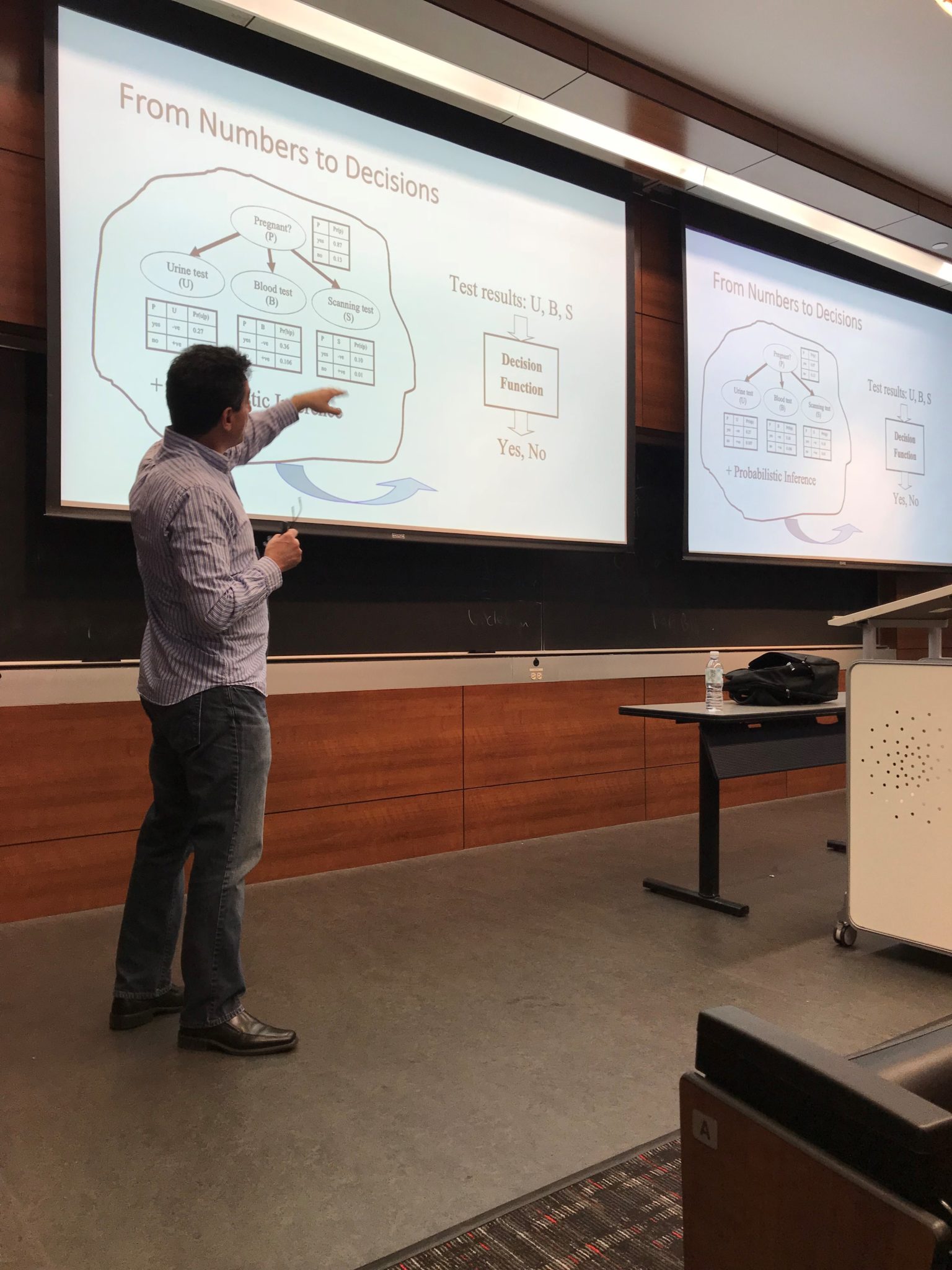

Dr. Darwiche and his collaborators have developed techniques to compile machine learning models from the expressive Bayesian Network to the highly regularized Naïve Bayes approach. They can view these approaches as ultimately producing a binary yes/no decision based on features. In cancer screening for example, this can mean determining whether the cancer is malignant or benign based on features of the patient. From this perspective, Dr. Darwiche compiles these machine learning models to an ordered binary decision diagram which describes the classifier as a flowchart leading to a yes/no decision. He noted that this representation generalizes a decision tree in that a given node can have multiple parents, enabling the ordered decision diagram to represent the same decision-making process in an exponentially more compact form. Furthermore, as in a standard flow chart, Dr. Darwiche explained that nodes in the decision diagram can be interpreted by examining the paths and corresponding sets of features leading to any node.

Given this interpretable formulation, Dr. Darwiche went on to explain the techniques his team developed to automatically examine vital properties from the resulting interpretable classification model. He demonstrated that with this representation we can immediately get explanations of why an input was classified the way it was by following the flowchart. He presented two manners in which we can interpret a model’s classification: minimum cardinality (MC) explanations, and prime implicant (PI) explanations. MC explanations consist of the smallest set of positive features that are responsible for a yes decision, and similarly the smallest set of negative features that are responsible for a no decision. PI explanations on the other hand are represented by the set of features for which the other features become irrelevant to the ultimate decision outputted by the model. In fact, once compiled to the ordered binary decision diagram, these objects of interest can be computed in linear time.

In addition to explaining individual decisions, the framework can efficiently verify desired properties of the machine learning models as a whole. For instance, in an educational setting, researchers have built a model that determines whether a student will pass given their exam answers. In this setting, we want to ensure that the model is monotone, or that if Susan’s correct answers include Jack’s correct answers, then Susan should pass if Jack passes. Dr. Darwiche went on to explain that this framework can verify a model’s monotonicity in quadratic time, and in the case that it is not monotone, give a valid counterexample. In fact, compiling the educational predictive model demonstrated that it is not always monotone. Furthermore, in a cancer classification setting, his approach demonstrated that given two identical patients, one with a personal history, and one without a personal history, the model would predict the one with personal history as having benign cancer and the other as having malignant cancer. As such, further model iterations would be required before deploying this system in practice.

Overall, Dr. Darwiche explained that machine learning approaches tend to fit functions rather than build models and represent knowledge. His approach enables those in AI to automatically distill knowledge gained from a learned model and rigorously test that the connections it learns mesh with the assumptions of practitioners. This fusion of model based and functional approaches to AI enables machines to learn parameters from data while ensuring the model robustness and interpretability required for implementation in the real world.