Have you ever wondered, “With the amount of new medical research published every day, how can doctors manage to use all of that knowledge?” Dr. Xiang Ren’s research is directly addressing that question (and more) by developing machine learning frameworks to transform unstructured text data into actionable knowledge. His algorithms learn to read through normal text and identify important entities like “Heart Attack” or “Aspirin” as well as the relationships between entities, such as Aspirin→Therapy For→Heart Attack. Once these entities and relationships are identified, they can be stored in one grand knowledge base and made efficiently searchable by people.

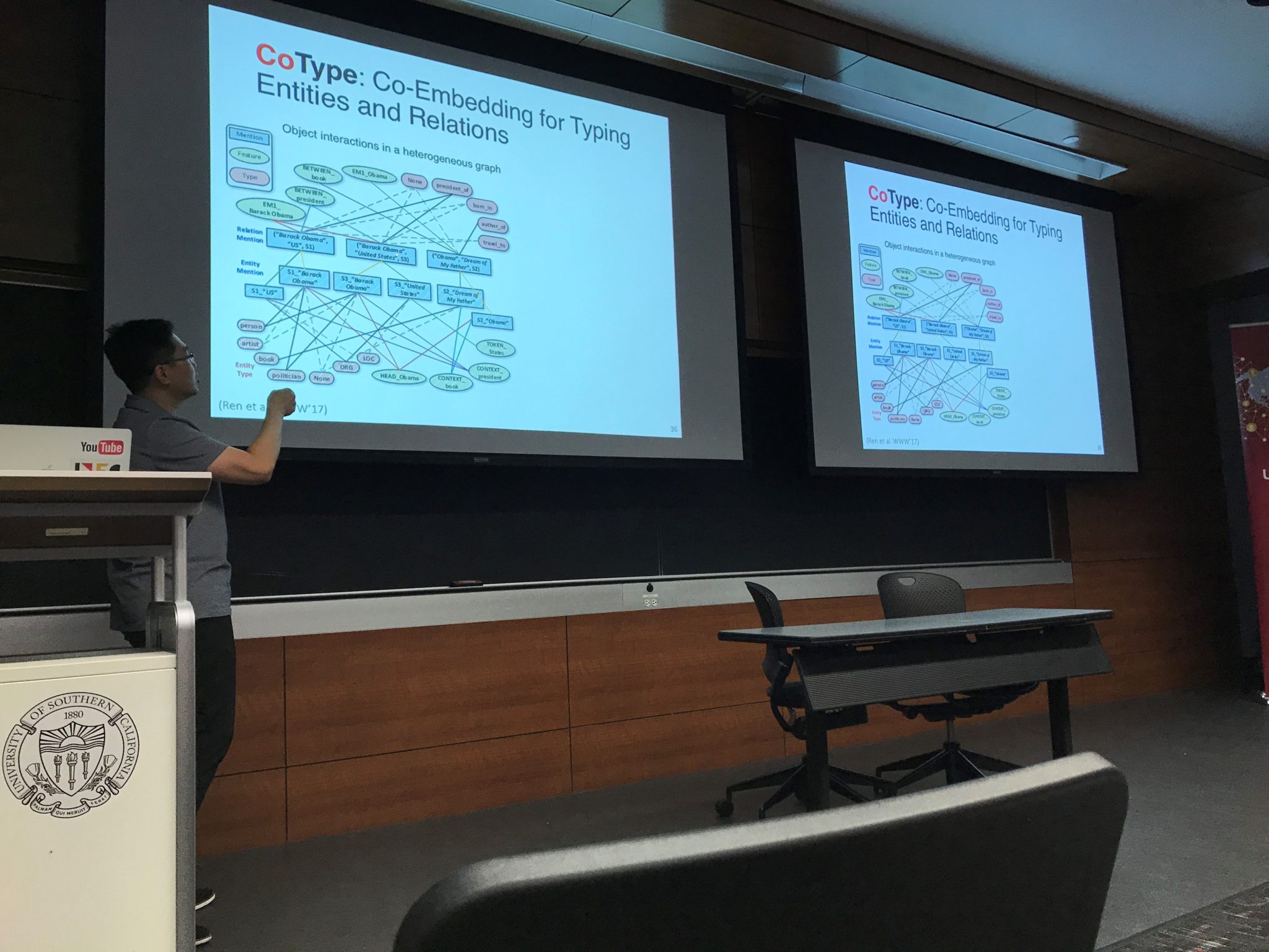

Dr. Ren recently applied his state-of-the-art algorithm, CoType, to 4 million publication abstracts on PubMed, a free search engine for life sciences and biomedical research. Using the millions of entities and relationships extracted from these abstracts, they built a knowledge base and an application for doctors to be able to sort through the data. Doctors were then able to use the application to search, “What organic chemicals can prevent heart disease?” Rather than manually sifting through a list of publications on Google, doctors instead saw an image with on-screen arrows pointing from “Heart Disease” to the myriad of chemicals that have been studied for preventing the disease. By simply clicking on one of the arrows, the doctors could see a list of the exact sentences from the papers describing the chemicals’ effect on the disease, getting them to the information they needed to make decisions faster. The best part? Dr. Ren’s work is efficient and scalable. A previous attempt by humans to create such an application took 2500 man hours to build ~2600 facts from 1100 sentences. But CoType? CoType used less than 1 hour to extract more than 26 million facts from 4 million publications.

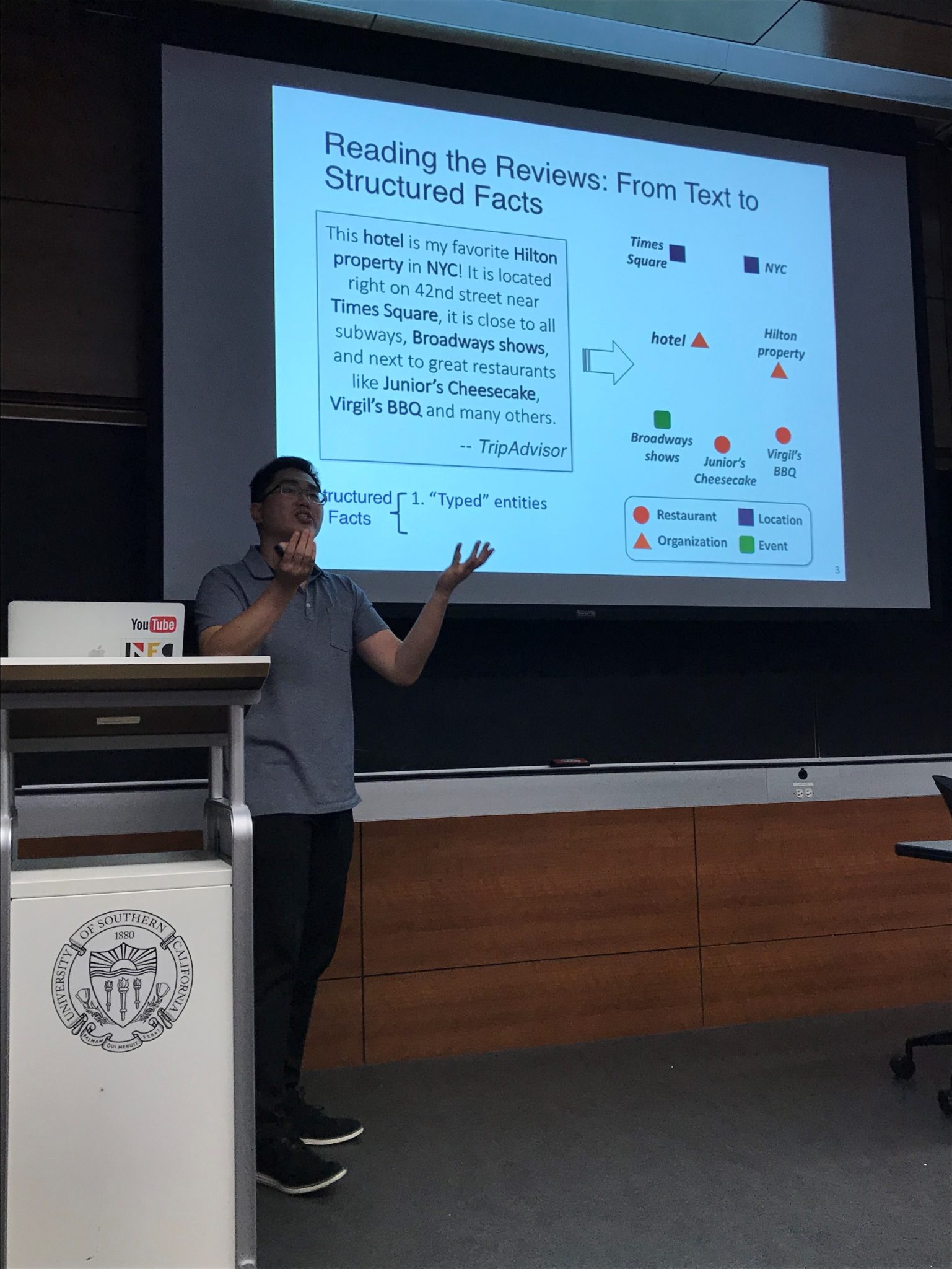

Dr. Ren also has a large body of work in industrial domains, such as extracting structure from Yelp or TripAdvisor reviews. A common first step in these domains is to compare entities from the text to entities on Wikipedia which serves as a sort of “background” knowledge base. Using information from Wikipedia, he can confidently identify some entities (restaurant, city, hotel, etc.), then intelligently utilize sentence context to propagate confidence forward to unknown entities. This work has been used to develop “collections” of hotels and restaurants simply from the text in their reviews, rather than by their location. Creating collections based on common “soft features” (nightlife, music, ambience, etc.) is often preferable for suggesting appropriate content to users rather simply suggesting by shared “hard features” such as location.

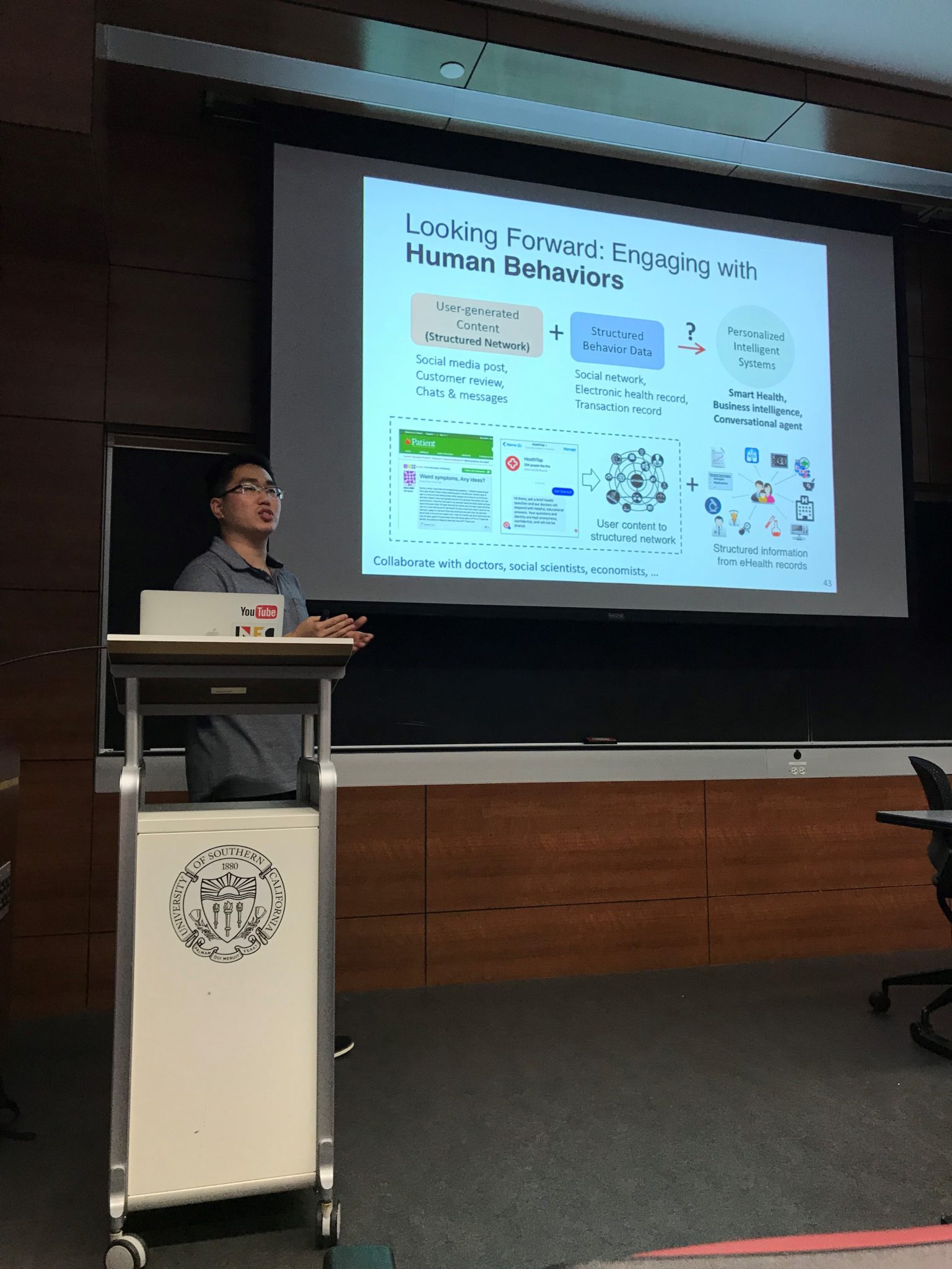

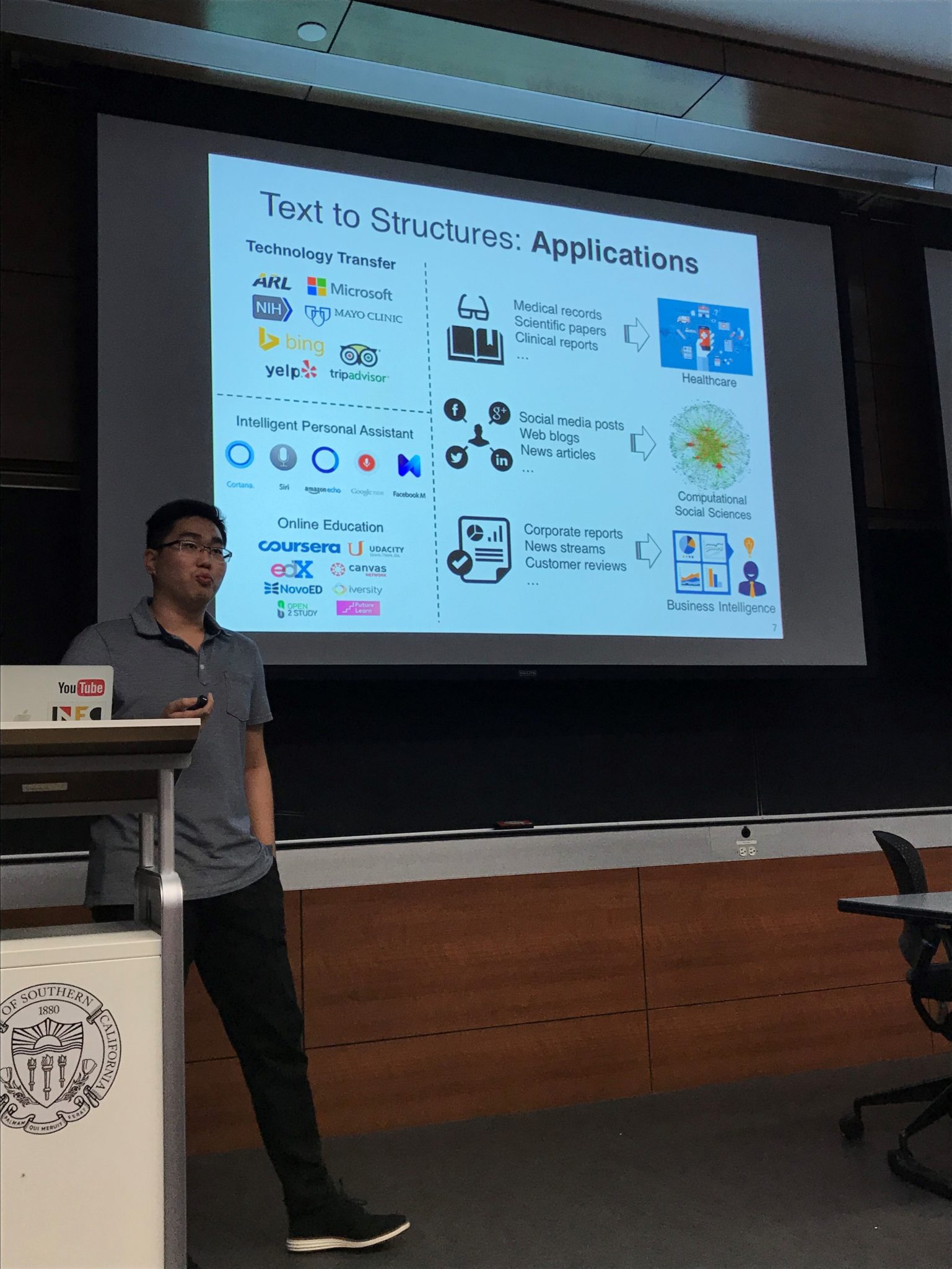

In the future, Dr. Ren sees himself expanding his research beyond text data to other types of knowledge graphs such as social networks, where human dynamics/behavioral data can inform relationships. His vision is to transform user generated content into networks with structured behavior to create maps of human dynamics and behavior. He also envisions using his analytics to power smart health and conversation agents by measuring from mobile sensors (check-ins, GPS, biosensors, and text signals.)

Dr. Ren is an Assistant Professor of Computer Science at USC and part-time Data Science Advisor at Snap. He runs the INK research lab, and is part of the USC Machine Learning Center, NLP Community@USC, and ISI Center on Knowledge Graphs. Previously, he was a visiting researcher at Stanford University and he received his PhD in CS at Illinois.